I Wrote a YAML Parser From Scratch in Zig. Here’s What I Learned.

I’m writing a software architecture visualization program in Zig that lets both developers and designers easily navigate a codebase and understand what the product does. Since it combines automatically generated data with human input, I needed a data format that was easy to generate from different languages, yet also easy to edit by hand. So, I went with YAML.

But Zig is a new language, and the existing YAML parsers didn’t seem to do what I needed, mainly parsing recursive data structures for the nested diagrams. Since this is a learning project and I was curious to experiment with different techniques for writing parsers, I decided to write my own. Specifically, I wanted to see whether I could make a zero-allocation parser and how well it would perform.

But did I really want to write a full YAML parser? Of course not! The advantage of writing things from scratch is that you know exactly what you need and can choose the trade-off between features and complexity/speed. So: no crazy features, and ‘yes’ and ‘no’ are strings, not boolean values.
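
In YAML 1.1, plain scalars such as `yes`, `no`, `on` and `off` resolve to booleans, which is a classic source of surprises. Deciding which plain scalars get a non-string type is a small function in the parser. Below is a minimal sketch in Python (not the author’s Zig code), assuming that only integers and the literals `true`/`false` are special. The post itself only states that `yes` and `no` stay strings, and the name `resolve_scalar` is hypothetical.

```python
import re

# Assumption: only optionally signed decimal integers get a numeric type.
_INT_RE = re.compile(r"[-+]?[0-9]+")


def resolve_scalar(text: str):
    """Return a (kind, value) pair for a plain (unquoted) scalar."""
    if _INT_RE.fullmatch(text):
        return ("integer", int(text))
    # Assumption: only these two literals are booleans, so "yes"/"no"/"on"/"off"
    # fall through to the string case below.
    if text in ("true", "false"):
        return ("boolean", text == "true")
    return ("string", text)
```

Keeping the boolean set this small means a value like `country: no` never silently turns into `false`.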

There were three parts I needed to write: the generic YAML lexer, the generic YAML parser, and the application-specific parser that turns the parsed YAML into the actual structs my application uses while running. The lexer divides the source file into chunks that are a bit easier to process. So { foo: 5 } gives the tokens “object_start”, “whitespace”, “single_line_string”, “colon”, “whitespace”, “integer”, “whitespace” and “object_end”. What I wanted from the generic parser as an end result is “object_entry_start” (storing the start and end indices of “foo” in the source), “integer” and “object_entry_end”. The final application-specific parser then needs to turn this into struct { foo: number }.

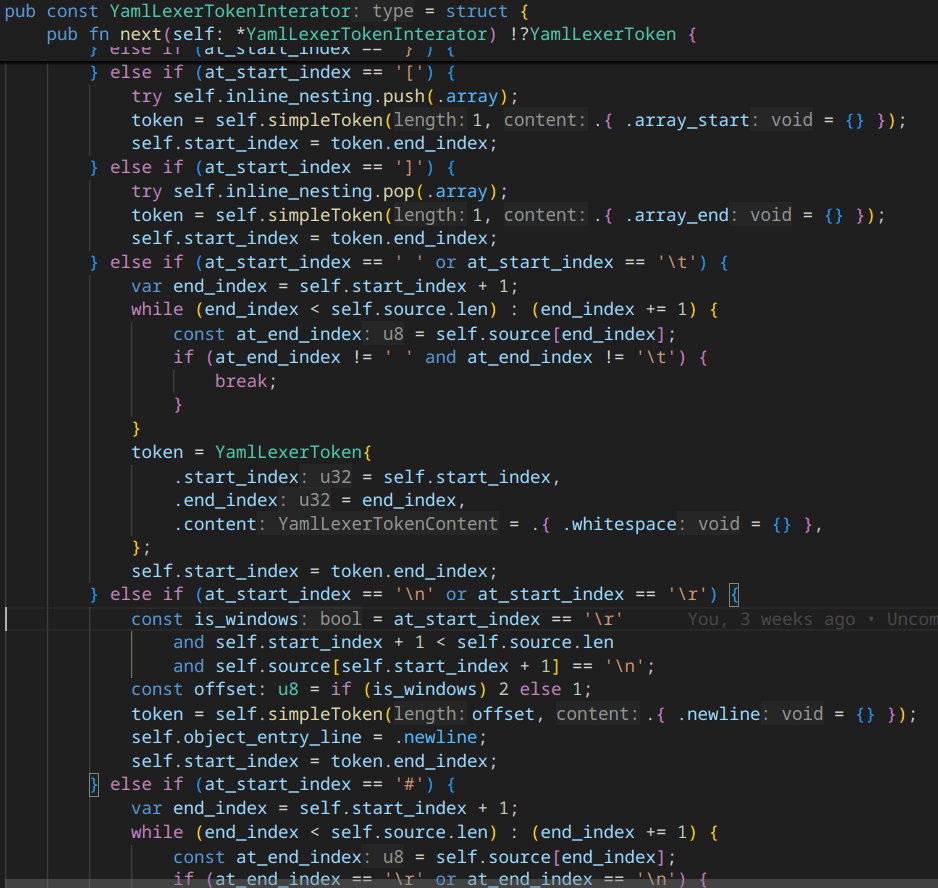
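
The lexer stage can be sketched as a loop over the source with a running index, yielding each token as a kind plus start/end indices into the source instead of copying substrings. This Python version is an illustration, not the author’s Zig lexer. The `Token` type and the exact character rules are assumptions, and only the flow-style subset needed for { foo: 5 } is covered.

```python
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str
    start: int  # index into the source, inclusive
    end: int    # index into the source, exclusive


def lex(src: str) -> Iterator[Token]:
    """Split flow-style YAML into tokens that reference the source by index."""
    i, n = 0, len(src)
    while i < n:
        c, start = src[i], i
        if c == "{":
            i += 1
            yield Token("object_start", start, i)
        elif c == "}":
            i += 1
            yield Token("object_end", start, i)
        elif c == ":":
            i += 1
            yield Token("colon", start, i)
        elif c in " \t":
            while i < n and src[i] in " \t":
                i += 1
            yield Token("whitespace", start, i)
        elif c.isdigit():
            # Integers: unsigned decimal digits only in this sketch.
            while i < n and src[i].isdigit():
                i += 1
            yield Token("integer", start, i)
        else:
            # Everything else runs until a delimiter. A key and an ordinary
            # string look identical at this level.
            while i < n and src[i] not in " \t:{}":
                i += 1
            yield Token("single_line_string", start, i)
```

Note that `foo` comes out as a plain `single_line_string`. The lexer cannot tell a key from any other string, so that decision belongs in the parser.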

The lexer was pretty quick and fun to write. I store a pointer to the source and the start index of the next text to parse. Most of it was pretty simple, but I tended to do too much in the lexer. At first I skipped comments in the lexer, but later moved that into the parser. I did, however, keep track of how deeply nested in arrays and objects the lexer was, so I could tell object keys apart from plain strings. That should have been the parser’s job, and it added needless complexity to the lexer. But overall, most of the code looks like this and is pretty easy to follow.
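
One way to move the key-versus-string decision out of the lexer is to give the parser one token of lookahead: a string immediately followed by a colon is a key. This hedged Python sketch turns the lexer tokens for { foo: 5 } into the “object_entry_start”, “integer” and “object_entry_end” events described earlier. The lookahead technique, the `parse_events` name and the `comma` token kind are my assumptions, not necessarily how the author’s parser works, and only flow-style mappings are handled.

```python
# Lexer output for "{ foo: 5 }" as (kind, start, end) tuples, whitespace included.
SRC = "{ foo: 5 }"
TOKENS = [
    ("object_start", 0, 1), ("whitespace", 1, 2),
    ("single_line_string", 2, 5), ("colon", 5, 6),
    ("whitespace", 6, 7), ("integer", 7, 8),
    ("whitespace", 8, 9), ("object_end", 9, 10),
]


def parse_events(tokens):
    """Turn flow-mapping tokens into entry events using one-token lookahead."""
    # Whitespace carries no meaning inside a flow mapping, so drop it.
    toks = [t for t in tokens if t[0] != "whitespace"]
    events = []
    entry_open = []  # one flag per open object: does an entry still need closing?
    i = 0
    while i < len(toks):
        kind, start, end = toks[i]
        next_kind = toks[i + 1][0] if i + 1 < len(toks) else None
        if kind == "object_start":
            entry_open.append(False)
        elif kind == "object_end":
            if entry_open.pop():
                events.append(("object_entry_end",))
        elif kind == "comma":
            # Hypothetical separator token: it ends the current entry.
            if entry_open[-1]:
                events.append(("object_entry_end",))
                entry_open[-1] = False
        elif kind == "single_line_string" and next_kind == "colon":
            # A key: record where it sits in the source, then skip the colon.
            events.append(("object_entry_start", start, end))
            entry_open[-1] = True
            i += 1
        else:
            # A value: pass it through unchanged.
            events.append((kind, start, end))
        i += 1
    return events
```

The nesting depth now lives in the parser’s `entry_open` stack, so the lexer no longer has to track it.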

Writing the parser, however, was a different story. I shot myself in the foot by trying to make it zero-allocation. This introduced a ton of edge cases to deal with. For example, when you encounter a new line in YAML, you might actually be closing multiple objects and arrays at the same time. But since the parser only returns one token at a time, it has to remember how many object/array closes are still pending. There were a few more things like that. It would have been simpler to use an arena allocator, which makes allocation much cheaper, and it might even have improved performance thanks to less branching.
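
The multiple-closes problem appears in any pull parser that returns one event per call. The Python sketch below keeps two pieces of state between calls: a counter of closes still owed after a dedent, and a single slot holding the line’s own event until those closes have gone out. Python allocates freely, so this only illustrates the control flow, not the zero-allocation constraint. The `PullParser` class and its `open`/`entry`/`close` events are hypothetical and cover only simple `key:` and `key: value` lines.

```python
class PullParser:
    """Pull parser for indentation-based mappings: one event per next() call.

    Hypothetical events: ("open", key) for a "key:" line that starts a nested
    mapping, ("entry", key, value) for a "key: value" line, ("close",) when a
    nested mapping ends, and None once the input is exhausted.
    """

    def __init__(self, src: str):
        self.lines = [line for line in src.splitlines() if line.strip()]
        self.pos = 0               # next line to read
        self.open_indents = []     # indentation of each "key:" line still open
        self.pending_closes = 0    # closes owed before pending_event
        self.pending_event = None  # the current line's own event
        self.pending_indent = 0    # indentation of the line in pending_event

    def next(self):
        if self.pending_closes == 0 and self.pending_event is None:
            self._read_line()
        # One dedent can end several mappings; emit one close per call.
        if self.pending_closes > 0:
            self.pending_closes -= 1
            self.open_indents.pop()
            return ("close",)
        event, self.pending_event = self.pending_event, None
        if event is not None and event[0] == "open":
            self.open_indents.append(self.pending_indent)
        return event

    def _read_line(self):
        if self.pos == len(self.lines):
            # End of input closes every mapping that is still open.
            self.pending_closes = len(self.open_indents)
            return
        line = self.lines[self.pos]
        self.pos += 1
        indent = len(line) - len(line.lstrip(" "))
        # Every open mapping whose key line is indented at least this much ends here.
        self.pending_closes = sum(1 for d in self.open_indents if d >= indent)
        key, _, value = line.strip().partition(":")
        value = value.strip()
        self.pending_indent = indent
        self.pending_event = ("entry", key, value) if value else ("open", key)
```

`list(iter(PullParser(src).next, None))` collects every event. For `"a:\n  b:\n    c: 1\nd: 2\n"`, the dedent before `d: 2` owes two closes, which come out on two separate calls before the `d` entry. An arena-backed parser could instead push all of those events into a buffer at once and hand them out afterwards.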

The application-specific parsing was simple and fun again! Because the generic YAML parser has no opinion on what you want to parse the data into, I could easily lay the data out in a cache-friendly way. For example, instead of storing the tree as nested hash maps, I could use a struct of arrays: the nodes in one linear array and their names in another. When rendering, I can simply iterate over the node array, because the nodes are already laid out depth-first.
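
A struct-of-arrays tree stored in depth-first (pre-order) order can be sketched as follows. The Python version uses plain lists for the columns, so it only shows the layout, not the cache behavior. In Zig, `std.MultiArrayList` provides this kind of per-field layout. The `Diagram` type, its field names and `render` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Diagram:
    """Struct of arrays: node i is names[i], depths[i], parents[i].

    Nodes are stored in pre-order, so each subtree is a contiguous slice.
    """
    names: list = field(default_factory=list)
    depths: list = field(default_factory=list)
    parents: list = field(default_factory=list)  # -1 for root nodes

    def add_tree(self, tree: dict, depth: int = 0, parent: int = -1) -> None:
        # Append the node first, then recurse into its children (pre-order).
        for name, children in tree.items():
            index = len(self.names)
            self.names.append(name)
            self.depths.append(depth)
            self.parents.append(parent)
            self.add_tree(children or {}, depth + 1, index)


def render(d: Diagram) -> str:
    # Rendering is a single linear pass; no pointer chasing through nested maps.
    return "\n".join("  " * depth + name for name, depth in zip(d.names, d.depths))
```

Because children always follow their parent, a renderer never has to jump around in memory. Walking the arrays front to back visits the tree in drawing order.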

Now, how fast is this? In my first tests, it parses at about 122 MB/s with a ReleaseFast build (not counting time spent reading from disk), which drops to 96 MB/s with ReleaseSafe and 27 MB/s with Debug. With ReleaseFast, my big but simple stress-test file of 123 MB (2,351,461 lines) parses in just under a second, or roughly 2.3 million lines per second, on my Intel i7-12700H CPU. But how much faster could this be? That’s the whole reason I started this project: to have a playground for reasoning about these kinds of questions and to get better at using the CPU at its full speed.
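
To reproduce measurements like these, it helps to read the file into memory first and time only the parse, so disk I/O is excluded. The following is a minimal Python harness, assuming a `parse` callable that accepts the whole buffer. The best-of-N repetition and the decimal megabyte (1,000,000 bytes) are my choices, not the author’s methodology.

```python
import time


def measure_throughput(parse, source: bytes, repeats: int = 5):
    """Time parse(source) on an in-memory buffer, so disk I/O is excluded.

    Returns (megabytes per second, lines per second) for the fastest run,
    using decimal megabytes (1 MB = 1,000,000 bytes).
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        parse(source)
        best = min(best, time.perf_counter() - start)
    megabytes = len(source) / 1_000_000
    lines = source.count(b"\n")
    return megabytes / best, lines / best
```

Taking the fastest of several runs filters out noise from other processes. The two figures differ only by the average line length, so they should always be consistent with each other.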

For now, however, I’m busy playing around with other features, like parsing a TypeScript codebase to see if I can generate useful information (in YAML) about its architecture to visualize in this program. For those curious, the full parser is available as a Gist on GitHub [1], but I haven’t had time yet to turn it into a proper package.

Are you interested in this kind of stuff? Feel free to reach out, and I may run and write about more performance experiments that you can follow along with :)